Unified Sampling

For the layman, unified sampling is a new sampling pattern for mental ray that is much smarter than the older Anti-Aliasing (AA) sampling grid. Unified is smarter because it only takes samples when and where it needs to. This means less wasted sampling (especially with things like motion blur), faster render times, and an improved ability to resolve fine details.

Technically speaking, unified is Quasi-Monte Carlo (QMC) sampling across both image space and time. Sampling is stratified based on QMC patterns and internal error estimations (not just color contrast) that are calculated between both individual samples and pixels as a whole. This allows unified to find and adaptively sample detail on a scale smaller than a pixel.
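This is what "QMC sampling across image space and time" looks like in practice. The minimal Python sketch below places low-discrepancy samples in (x, y, t) for one pixel using a Halton sequence. The Halton sequence is a stand-in chosen for illustration. It is not mental ray's actual QMC pattern, and the error-driven stratification described above is left out. The function names are hypothetical.

```python
def radical_inverse(index: int, base: int) -> float:
    """Mirror the base-`base` digits of `index` around the radix point."""
    result = 0.0
    inv_base = 1.0 / base
    factor = inv_base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * factor
        factor *= inv_base
    return result


def halton_samples(count: int, pixel_x: int, pixel_y: int, start: int = 1):
    """Return `count` (x, y, t) samples for one pixel.

    x and y are image-space positions (pixel corner plus an offset in [0, 1));
    t is the normalized shutter time in [0, 1) used for motion blur.
    Bases 2, 3 and 5 keep the three dimensions decorrelated.
    """
    samples = []
    for i in range(start, start + count):
        samples.append((
            pixel_x + radical_inverse(i, 2),
            pixel_y + radical_inverse(i, 3),
            radical_inverse(i, 5),
        ))
    return samples


for x, y, t in halton_samples(4, pixel_x=10, pixel_y=20):
    print(f"x={x:.4f} y={y:.4f} t={t:.4f}")
```

Each sample carries its own shutter time t. That is the sense in which sampling is jittered across time as well as image space. A real renderer would also decorrelate neighboring pixels (for example by scrambling the sequence per pixel). This sketch reuses the same offsets in every pixel.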

Advantages of unified sampling:

- Fast ray-traced motion blur. Motion blur should also be smoother and require less memory.

- Fast ray-traced depth of field.

- Better at picking up small geometry and detail.

- A foundation for future rendering techniques and materials.

You SHOULD use unified sampling. Here is how…

How to Enable Unified Sampling (Prior to Maya 2014 without the Community UI)

Unified sampling is not exposed within Maya but can be enabled with string options.

“unified sampling”

- Enables or disables unified sampling.

- boolean, defaults to false

Note: You should use “Raytracing” with regular and progressive unified, NOT the default of “Scanline” (Render Settings > Features > Primary Renderer). This is because the scanline mode is old, deprecated, and none of the cool kids are using it. Switching to raytracing from scanline can take minutes off your render while reducing memory usage. Using “Rasterizer” with unified sampling enables the unified rasterizer.

Unified Controls

In addition to performance improvements, unified sampling simplifies the user experience by unifying the controls for various mental ray features including progressive rendering, iray, and even the rasterizer. For regular unified and progressive unified, you basically just have one control that you need to consider: Quality.

“samples quality”

- This is the slider that controls image quality. Increasing quality makes things look better but takes longer.

- It does this by adaptively increasing the sampling in regions of greater error (as determined by the internal error estimations mentioned before).

- You can think of quality as a samples per error setting.

- Render time increases logarithmically with “samples quality”.

- Generally leave it somewhere between 0.5 (for fast previews) and 1.5; 1.0 is deemed “production quality”. You can go higher (or lower) if the situation demands it. Possible values are 0.0 and above.

- scalar, defaults to 1.0

Additional Controls

“samples min”

- The minimum number of samples taken per pixel.

- Set to 1.0. Don’t change this unless you have a pretty gosh darn good reason.

- Using a value less than 1.0 will allow undersampling. This could be useful for fast previews.

- This is only a limit. Use “samples quality” to control image quality.

- scalar, defaults to 1.0

“samples max”

- A limit for the maximum number of samples taken per pixel.

- The default setting of 100.0 will generally provide you with a large enough range of adaptivity for production work. Some situations, such as extreme motion blur or depth of field, may require more than a hundred samples per pixel to reach the desired quality and to reduce unwanted noise. Setting “samples max” to 200.0 or 300.0 in these situations can help.

- This is only a limit. Use “samples quality” to control image quality.

- scalar, defaults to 100.0
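The interplay of “samples quality”, “samples min”, and “samples max” can be sketched as a per-pixel loop. This is a hypothetical Python model, not mental ray's algorithm. The error estimate is simply the standard error of the mean, and `base_tolerance` is an invented constant that quality divides. It does show the behavior described above: quality decides when to stop, and min/max are only limits.

```python
import itertools
import math
from typing import Callable, Tuple


def sample_pixel(shade: Callable[[], float],
                 samples_quality: float = 1.0,
                 samples_min: int = 1,
                 samples_max: int = 100,
                 base_tolerance: float = 0.05) -> Tuple[float, int]:
    """Adaptively sample one pixel; return (pixel value, samples taken).

    Hypothetical error estimate: the standard error of the mean. A higher
    samples_quality means a smaller tolerance, so noisy pixels get more
    samples. samples_min and samples_max only bound the count.
    """
    if samples_quality > 0:
        tolerance = base_tolerance / samples_quality
    else:
        tolerance = math.inf
    values = []
    while len(values) < samples_max:
        values.append(shade())
        n = len(values)
        # At least two samples are needed before a variance can be estimated.
        if n < max(samples_min, 2):
            continue
        mean = sum(values) / n
        variance = sum((v - mean) ** 2 for v in values) / (n - 1)
        if math.sqrt(variance / n) <= tolerance:
            break
    return sum(values) / len(values), len(values)


def alternating_pixel() -> Callable[[], float]:
    """A worst-case high-contrast pixel: samples alternate 0, 1, 0, 1, ..."""
    values = itertools.cycle([0.0, 1.0])
    return lambda: next(values)


print(sample_pixel(lambda: 0.5))                           # flat area: stops early
print(sample_pixel(alternating_pixel()))                   # hits samples max (100)
print(sample_pixel(alternating_pixel(), samples_max=300))  # converges just above 100
```

The alternating pixel stands in for a hard case such as heavy motion blur. At quality 1.0 it runs into the default limit of 100 samples, and raising “samples max” lets it finish. That mirrors the advice to use 200 or 300 in such situations. This model does not cover “samples min” values below 1.0 (undersampling across several pixels).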

“samples error cutoff”

- Provides an error threshold: once a pixel’s error falls below this value, mental ray no longer considers it for further sampling.

- This is only useful for scenes with very high quality values (like 3.0+) where areas of low error are still being sampled/considered.

- In general, use “samples quality” as the sole method to control image quality and leave “samples error cutoff” at 0.0 (disabled).

- If you choose to use this setting, set it to a very low value like 0.001.

- scalar, defaults to 0.0
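Here is a minimal sketch of what the cutoff does, assuming a per-pixel error estimate is already available. The `pixel_errors` values are made up for illustration, and the function name is hypothetical. With the default of 0.0 nothing is retired and “samples quality” alone decides. A small positive cutoff drops low-error pixels from further consideration.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]


def pixels_still_considered(pixel_errors: Dict[Pixel, float],
                            samples_error_cutoff: float = 0.0) -> List[Pixel]:
    """Return the pixels that remain candidates for more samples.

    0.0 (the default) disables the cutoff, so every pixel stays under
    consideration and samples quality alone decides. A positive cutoff
    retires pixels whose estimated error has fallen below it.
    """
    if samples_error_cutoff <= 0.0:
        return list(pixel_errors)
    return [pixel for pixel, error in pixel_errors.items()
            if error >= samples_error_cutoff]


errors = {(0, 0): 0.0004, (1, 0): 0.02, (2, 0): 0.0009}   # made-up estimates
print(pixels_still_considered(errors))          # [(0, 0), (1, 0), (2, 0)]
print(pixels_still_considered(errors, 0.001))   # [(1, 0)]
```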

“samples per object”

- Allows you to override “samples min” and “samples max” on the per object level.

- If enabled, overrides are set under the mental ray section of each object’s shape node.

- “Anti-aliasing Sampling Override” is the override switch

- “Min Sample Level” sets “samples min”

- “Max Sample Level” sets “samples max”

- Note: these values have different meanings for unified sampling than they do for AA sampling, i.e. a value of 3 corresponds to 3 samples per pixel, not 2^(3*2) = 64.

- boolean, defaults to false
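The difference between the two meanings is easy to check numerically. This Python sketch (hypothetical function names) contrasts the two rules. Under classic AA, a sample level n means 2^(2n) = 4^n samples per pixel, and negative levels undersample. Under unified sampling, the per-object value is the sample count itself.

```python
def aa_samples_per_pixel(level: int) -> float:
    """Classic AA sampling: level n means 2^(2n) = 4^n samples per pixel.

    Negative levels undersample, e.g. level -2 is one sample per 16 pixels.
    """
    return 4.0 ** level


def unified_samples_per_pixel(value: float) -> float:
    """Unified sampling: the per-object value is the sample count itself."""
    return float(value)


for value in (1, 2, 3):
    print(f"value {value}: AA {aa_samples_per_pixel(value):.0f} spp, "
          f"unified {unified_samples_per_pixel(value):.0f} spp")
print(f"AA level -2: {aa_samples_per_pixel(-2)} spp")
```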

You can learn more about using Unified Sampling in the Unified Sampling for the Artist post.

Additional Notes

When unified sampling is enabled, mental ray will ignore certain settings:

- The AA settings (i.e. min sample level, max sample level, anti-aliasing contrast) are ignored because you are now using the new unified sampling pattern.

- Jitter is ignored because unified is inherently QMC jittered across image space.

- With motion blur enabled, time samples/time contrast (these are the same setting) is ignored because unified is also QMC jittered across time. You still need to set motion steps for motion blur.

Quality and error cutoff can be set to per color values for RGB. This is probably overkill for 99% of situations. If you wish to control per color values, you can adjust the string options to look something like this:

- “samples quality”, “1.0 1.0 1.0”, “color”

- “samples error cutoff”, “0.0 0.0 0.0”, “color”
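If you generate these entries with a script, the scalar and per-color forms differ only in the value text and the type. Here is a small hypothetical Python helper that formats a (name, value, type) triple the way the entries above are written. It assumes boolean options are written as “true”/“false”, which this post does not show explicitly.

```python
from typing import Sequence, Tuple, Union

OptionValue = Union[bool, float, Sequence[float]]


def string_option(name: str, value: OptionValue) -> Tuple[str, str, str]:
    """Format a (name, value, type) string option entry.

    Booleans become "boolean", single numbers "scalar", and three numbers a
    per-channel RGB "color" such as "1.0 1.0 1.0".
    """
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return name, "true" if value else "false", "boolean"
    if isinstance(value, (int, float)):
        return name, str(float(value)), "scalar"
    if isinstance(value, (list, tuple)) and len(value) == 3:
        return name, " ".join(str(float(c)) for c in value), "color"
    raise ValueError(f"unsupported value for {name!r}: {value!r}")


for entry in (string_option("unified sampling", True),
              string_option("samples quality", (1.0, 1.0, 1.0)),
              string_option("samples error cutoff", (0.0, 0.0, 0.0))):
    print(entry)
```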

Posted on October 31, 2011, in maya, unified sampling and tagged maya, mental ray, unified sampling.

FFfffff Yeah!

I’m so there… Thanks guys!

Good article and great site,

but please cut out all the recommendations for sampling values, like 100 for max samples, or that 1.5 quality means production quality.

Try to render motion blur, DOF, or glossies and you will see that none of these values are enough to render good quality. For example, a value of 10 is easily a must for strong DOF to get rid of all the sampling artifacts. Glossies are also a different thing in combination with DOF or motion blur: 100 as max is too low; 200 or 300 as max is normal here.

But if you get good quality out of your settings, please show some examples here.

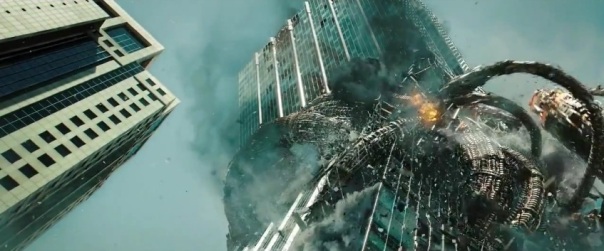

It would also be great to know what settings ILM used for the Driller. I can’t imagine, but they must be insanely high. 😉

Hi Kzin.

The values we are talking about are values that have been used for some of the productions we have been a part of or have direct knowledge of. So these are based on actual finished products. Also, during downtime, jobs are sent to the farm to test different features and methods. This means we can leverage hundreds of machines to run iterations over and over again until we find a setting we like. This doesn’t mean that all settings work for every scene, but they are a good place to start. The alternative is to simply not comment on the settings we have used and let you find your own, but that’s not very helpful as a starting place, is it?

We will be posting some examples here too. It just takes a while to get assets that can be shared. We spend 11 hours a day on things we can’t take home. 🙂 And assets generated by plug-ins like Onyx Tree are also forbidden to share.

Tuning a scene for a completely artifact-free still image will indeed take some time. But we are focused on VFX here, which has different sensibilities. A lot of the projects we work on are designed to match a filmed plate (either film or digital), which means there is inherent grain we must match. So rendering a frame that has no grain and then re-graining it is wasted time (many of these are re-matched to the grain automatically by an artist). The vast majority of what you see in a film is grainy, but you don’t see it because it’s in motion. Perceptually this detail is lost. We also find that people tend to enhance their motion blur to levels that are not realistic by multiplying it. This might look “cool”, but the motion trail isn’t correct. When matching filmed elements, it’s generally much shorter than most people realize.

As for ILM, I believe their Quality with Unified was set to 3.0 to ensure that they caught all the tiny debris in the shot. In a scene where I don’t have tiny parts of buildings flying around, I can get away with less. That’s just to illustrate; I do not have hard numbers on their samples settings.

Thanks David,

Yes, film is different because of the noise (and there can be a lot of it, Deathly Hallows for example; of course it’s film noise, so it’s not that bad 😉).

I could not believe the Driller was rendered with mental ray; I thought ILM only uses RenderMan for their hero shots at the moment.

I did some tests with unified in the last couple of days; it’s way better than adaptive in terms of detail catching and render times.

I think the problem was that I tried to render smooth DOF, and this is nearly impossible with good render times (as I wrote in another forum, it looks like unified and the DOF bokeh shader are “disconnected” at the moment?). Motion blur is the opposite, because you can get clean results with comparably good render times. So I hope DOF will also benefit from unified in the near future.

I did a test yesterday with unified and motion blur; it can be seen here:

http://s7.directupload.net/file/d/2718/rb6zfees_jpg.htm

The render time is not that bad for all the sampling in the scene, and it’s clean; I think cleaner than you would need for film.

The aspect of new features that many people miss is that there is a law of diminishing returns for features. Each feature has a different threshold. Newer features tend to extend that threshold to favor more complex scenes and effects that were previously prohibitive. I will go over this later in more detail, but simply put: achieving the same level of quality in a complex scene with regular adaptive rendering (AA) should take longer than with Unified Sampling (all else being equal).

So getting a similar frame in something complex from regular AA will be more expensive than with Unified Sampling.

Another example is running FG with a complex lighting setup. You will get a lot of disco ball effects that are hard to clean up. Use Irradiance Particles, which are “slower”, and you end up with a correct result immediately. Clearing up that variance in FG would take longer than using IP. So your “better” original method is now too difficult to use in something more advanced.

The bokeh shader is indeed compatible with Unified Sampling. To get an idea of what kind of DOF you need, set the bokeh samples to ‘1’ and render with Unified Sampling and Progressive “on”. Do not use the progressive controls; just set the Unified Sampling controls to your guesstimated Quality and max samples, turn Progressive “on”, and render. Lower the local sampling (glossy rays, occlusion rays, etc.) on objects in the area of most confusion (blurriness), since there will be little or no visible detail there. Then render. The first pass should be fairly quick and give you a rough idea of what the image will look like when converged. DOF is expensive, but it should be less expensive with Unified Sampling than with regular AA.

I will explain more of this later with images, settings, and diagnostics (very helpful diagnostics come with Unified Sampling by the way.) Hopefully in the next week or so I can generate a post with Unified Sampling explanations in a visual context.

Log in to the ARC forum and look here: http://forum.mentalimages.com/showthread.php?8296-Mental-Images-User-Group-Gallery&p=36025#post36025

The lighting was partially brute force (builtin IBL), with glossy reflection and SSS.

Unified does a better job. In my little example all local sample values are set to 1.

It’s the best way to render glossies and area lights.

Glossies are the best way to show what unified can do because of the better detail catching. It’s not possible to catch those details with adaptive; you would end up with 256 or 512 local glossy rays, which would slow down the rendering a lot.

I will try the progressive mode. I normally avoid it because there is no framebuffer support; or is this different with unified progressive (I did not test this combination)?

Progressive isn’t meant for final renders, it’s a way for you to tune and test your scene. Once it’s where you want it to be, turn Progressive “off” and render. The Unified Controls will mean you should get a similar quality but now your renders will contain your passes, etc.

Hey Kzin,

I have an idea why we have different expectations of Quality. In many cases I have not completely abandoned the original ways of using local samples. I have decreased them for Unified Sampling but not dropped them to ‘1’ for everything. This is because local samples have an added efficiency in many cases like soft shadows.

However, in your scenario where everything is set to ‘1’ you will not only need more Quality, but you will need more samples as well. If previously a pixel was taking 5 samples and the shader sent 64 reflection rays each time, then I had 320 rays generated (assuming no additional ones were spawned by struck objects). Now, at local samples ‘1’ I would need about 320 samples from Unified Sampling (actually, less but I’ll get to that). Since the default “samples max” is set to 100, your result was unsatisfactory. You would indeed need more than that.
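Here is the ray arithmetic above in a few lines of Python (hypothetical function names). As the reply notes, the unified figure is an upper bound. In practice fewer eye samples are needed.

```python
import math


def rays_per_pixel(eye_samples: int, local_samples: int) -> int:
    """Secondary rays per pixel when each eye sample fires `local_samples` rays
    (ignoring any rays spawned by the objects those rays hit)."""
    return eye_samples * local_samples


def eye_samples_for_budget(ray_budget: int, local_samples: int) -> int:
    """Eye samples needed to fire the same number of rays at a lower local count."""
    return math.ceil(ray_budget / local_samples)


SAMPLES_MAX_DEFAULT = 100

budget = rays_per_pixel(eye_samples=5, local_samples=64)
needed = eye_samples_for_budget(budget, local_samples=1)
print(budget, needed, needed > SAMPLES_MAX_DEFAULT)  # 320 320 True
```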

I will cover more of this in a post I am working on right now. But your explanation of what you were doing made a little light go on in my head. You’re somewhat further ahead of the game than I originally thought. 😉 It’s a shame we aren’t using BSDF yet because your expectation would have been spot on.

Thanks!

Hi Kzin,

Thanks for your comments! I have adjusted my recommendations to accommodate greater quality and max samples for situations that produce a lot of noise. Still, I feel the ranges I have provided are an excellent place to begin 🙂

@David:

After some more in-depth tests with unified, I think it’s a good way to set local sampling to 1 and let unified do the rest. You wrote in some posts in the ARC forum that unified doesn’t need a lot of local sampling, and my early tests showed the same.

But some time ago, when the V-Ray open beta started for XSI, some comparisons showed that DOF rendering is a lot more complicated to solve in mental ray than in V-Ray. So I started to do some more tests, and here I ran into a problem (and that’s why I thought DOF is not connected with unified). If you increase local sampling for DOF but render with unified, you pay a lot of render time compared to 1 DOF sample and high unified settings. The same goes for strong diffuse reflections: it’s better to let unified do all the sampling, because local sampling does not give you the same detail as high unified sample rates. If you use local glossy samples in the mia_material, it looks like mental ray is not using unified to resolve more detail. Instead it seems to use the old “adaptive” AA, which cannot resolve the same detail as unified, and this ends up in far too noisy reflections (I don’t know how to describe it another way).

So with higher local samples I could not get clean results with the settings you suggested. Because of this I came to use only 1 sample for all local effects, and it worked quite well. Another side effect is that you don’t need to tune local samples until the noise quality is good enough; you only set the min/max and that’s it. The idea does indeed come from BSDF, yes, because there is no local sampling there, which makes things a lot easier.

@bnrayner:

I wrote this because the sampling of unified is a bit different, and a lot of people want to get clean, noise-free results, especially in comparison with other renderers. If they use “only” 100 samples as max and don’t get clean results, then you might get the typical “new feature with bad quality” postings all around. 😉

And because of this (of course not only this), the site and its intention are great.

Hi and thanks for your blog.

I’ve made a script to add string options:

http://forums.cgsociety.org/showthread.php?t=971719

I think it’s easier.

Hope this can help. 🙂

Regards,

Dorian

Hi master, thanks for the support…

One question…

So as I understand it, I need to change the settings in my materials and lights manually, one by one? Unified doesn’t override their sample values as it does with AA and jitter?

And finally, does unified work with IP, IBL, and importons settings? Does it work in DBR or Backburner mode?

Thanks, and congratulations on your great work.

For shader settings you can either change them manually or you can script it. In some cases you can alter the .mi include file where some default values are found and it will be permanent when you start up Maya.

Unified Sampling does not directly alter samples of anything. It simply samples the image in a smarter way. It is also naturally jittered from the QMC pattern.

It works with all of the indirect lighting methods and in fact makes it easier to use brute force indirect lighting for high quality frames. It should also work with Backburner once it’s saved in the scene file.

For now Unified Sampling is NOT supported for DBR (distributed rendering per tile).

Great tip: override the .mi file.

I made a test on a production job but I got bad results… a lot of noise… The same scene without unified has nice glossy reflections…

Render time was 57 min with unified, versus 1:30 h without unified and with higher sample values.

My scene is in 3ds Max Design 2012 64-bit, and I use the mr option manager script to enable unified, IBL, importons, and IP.

My workflow is the same as I read in the post and comments: I put 1 in the glossy sample values for all my materials, and for IBL, IP, and importons I left the default values.

I will try with other scenes and then post my results.

Any idea why this is not working in Maya 2012 x64? I add the string but absolutely nothing happens… Any help? Thanks

Make sure the rasterizer is off

Hi David,

Firstly, thank you for the super informative blog. I stumbled across it the other day and have been “consuming” the info since. This “sudden” improvement in the mental ray arsenal comes at a crucial time, as I was seriously considering moving to a different renderer for our small studio.

I’ve tried the userIBL shader, which seems excellent. I am keen to try the FG shooter also.

I am trying unified rendering and feel rather foolish: I can see the improved render, and AA sampling has no effect, so I know it’s working, but I just can’t see where the sampling controls are located! Could you please point them out to me?

Thanks a lot for your efforts,

all the best Giancarlo

Hi Giancarlo,

Unified Sampling does not use the standard AA controls. It must be added using string options. I assume if you’re using the user_ibl that you are on Maya 2013? Make sure you have the latest service pack (SP1) as well.

Unified can be enabled as described in Brenton’s post above or you can use one of the many UIs floating around to expose it. We are currently working on a new UI for Quality and render settings in Maya. You can see the code here:

http://code.google.com/p/maya-render-settings-mental-ray/

And the discussion here (once added to the forum):

http://forum.mentalimages.com/showthread.php?9771-Maya-options-UI

Under: trunk>modified you may find some help scripts to look at.

Otherwise your controls will reside in the String Options as shown in this and the other Unified Sampling posts.

Hi David,

Thanks for the swift reply. Yes, I am using 2013 SP1. I do have unified sampling enabled via string options, but nowhere do I see the new “Unified Controls” with the ability to change “samples quality”, nor do I see the “Additional Controls” described by Brenton above in the post. Should these controls become visible in the miDefaultOptions dialog box once unified sampling has been enabled?

cheers Giancarlo

OK, after downloading Dorian’s script I can see now that I needed to add extra strings for all the unified sampling options, such as “samples quality”, “samples error cutoff”, etc.

I had wrongly presumed that by simply “turning on” unified sampling, these options would appear.

Thanks again for your time David,

Giancarlo

Check the new blog post for the current UI, it’s in testing phase. Give it a shot and let us know how it goes!

Hi David. I’m trying out the script, which seems to work great and is intuitive to use. My question is: if I’m rendering a scene using multiple workstations here at our studio, would I need to install the script on all the machines?

Hi Giancarlo,

For multiple workstations you would have to move the script to where all the other mental ray UI scripts are. This will be in the Maya install location; for example, on Linux they are located here:

{maya install location}/maya2013-x64-SP1/mentalray/scripts

Keep in mind that you need to replace {maya install location} with wherever you have it installed.

Make sure you copy the original files to a backup location. For example, on Linux (note: you will need root permissions):

cd {maya install location}/maya2013-x64-SP1/mentalray/scripts
cp mentalrayUI.mel mentalrayUI.mel.backup

cp {new ui location}/scripts/mentalrayUI.mel ./mentalrayUI.mel

You will have to do this for each of the new files (not just mentalrayUI.mel). I actually used a soft link instead of a copy to install the new files. Now it is easy to replace the files and I don’t have to mess around in the Maya install too much. Because the backup copy leaves the original file in place, the link needs -f to replace it:

ln -sf {new ui location}/scripts/mentalrayUI.mel mentalrayUI.mel

At least that is one way to do it.
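The backup-then-link steps above have to be repeated for every file in the new UI, so they can be scripted. This is a minimal Python sketch under a few assumptions: a Linux machine, write access to the Maya scripts folder, and that every regular file in the new UI's scripts folder should be linked. `install_ui_scripts` is a hypothetical name, not part of the UI package.

```python
import shutil
from pathlib import Path
from typing import List


def install_ui_scripts(new_ui_scripts: Path, maya_scripts: Path) -> List[str]:
    """Link every file of the new UI into Maya's mental ray scripts folder.

    Mirrors the manual steps: keep a one-time ".backup" copy of each original
    file (cp), then replace it with a symbolic link to the new file (ln -sf).
    Returns the names of the files that were linked.
    """
    linked = []
    for new_file in sorted(new_ui_scripts.iterdir()):
        if not new_file.is_file():
            continue  # skip sub-folders
        target = maya_scripts / new_file.name
        backup = maya_scripts / (new_file.name + ".backup")
        # Back up only a real original file, and never overwrite an old backup.
        if target.is_file() and not target.is_symlink() and not backup.exists():
            shutil.copy2(target, backup)
        # Remove whatever is there (file or old link) so the link can be created.
        if target.is_symlink() or target.exists():
            target.unlink()
        target.symlink_to(new_file.resolve())
        linked.append(new_file.name)
    return linked
```

Call it with your own `{new ui location}/scripts` and `{maya install location}/maya2013-x64-SP1/mentalray/scripts` paths, for example `install_ui_scripts(Path(new_ui_dir), Path(maya_scripts_dir))`. Running it again is safe: an existing `.backup` is never overwritten, so the original files survive repeated installs.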

Also, if you’re just rendering on nodes, those machines do not need the script, string options are saved with the Maya file.

However, if you do not have a script saved on a workstation and open a file, you will not see the options enabled/disabled unless you look at the miDefaultOptions. This can cause problems later if you do not know what was saved with the file.

Excellent, thank you both for the info. I’ll give it a try. They’re not heavy scenes, but I’d like to use unified with RealFlow and the dielectric material, rendering over the network. Cheers, Giancarlo

I might avoid the dielectric material; it’s an older material, and mia_material might work better. But sometimes the dielectric will be faster for inexpensive water/glass effects. Just keep in mind it’s deprecated.

ahhh good point, will go MIA, cheers

Hi, I tried all 3 scripts linked here. I have a few questions though,

1) Can you use “enjoy mentalray stringoptions” together with mr-rendersettings .2? I find that the image based lighting, miscellaneous, and final gathering legacy options inside enjoy mentalray stringoptions may be useful, and they are not available in mr-rendersettings .2.

2) What does miupdatestringoptions.py do, and how do I load it? I can’t seem to find any code to enter in Python to load it.

3) What’s the difference between mr-rendersettings .2 and .1? I currently have .2 installed. Also, I find that enabling unified sampling in the render globals using the mr-rendersettings script did not create any string at all under miDefaultOptions. Would this be OK?

And lastly, mentalcore already has unified sampling built into it. I was wondering if I would still need these scripts to use unified sampling over that one?

The reason I wanted to use unified sampling is that I wanted to see if it would solve my banding problem, shown here: http://i1124.photobucket.com/albums/l567/Jinian15/bandingprob.jpg

I have tried all the unified sampling options, including progressive, but all it does is reduce the grain and noise of my render, not the banding. My monitor is properly calibrated, and I am using a linear workflow in Maya.

Thanks, hope someone can help.

Your banding issue might be related to insufficient bit depth in either your rendered image or viewer. Render to OpenEXR and make sure that the bit depth is at least 16 bits in both render settings (Render settings > Quality > Framebuffer > Data Type) as well as the render view (Render View > Display). You may need to restart Maya.

Yes, I have tried that. It got rid of a lot of the noise and distortion caused by the banding, but the banding is still there, just fainter.
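A little arithmetic shows why bit depth matters for banding. Quantize a subtle gradient and count how many distinct values survive, since each distinct value becomes a visible band. The minimal Python sketch below (hypothetical function name) quantizes linear values directly. It ignores dithering, display transforms, and the viewer.

```python
def distinct_levels(bits: int, start: float, end: float, width: int) -> int:
    """Quantize a smooth horizontal ramp to `bits` per channel and count the
    distinct output codes (each distinct code shows up as one band)."""
    max_code = (1 << bits) - 1
    codes = set()
    for x in range(width):
        value = start + (end - start) * x / (width - 1)
        codes.add(round(value * max_code))
    return len(codes)


# A subtle dark gradient across 1920 pixels, e.g. a dim sky or wall.
print(distinct_levels(8, 0.10, 0.15, 1920))   # only about a dozen bands
print(distinct_levels(16, 0.10, 0.15, 1920))  # 1920: every pixel its own level
```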

If you are using mental core then you should stick to that UI and not another. Corey already maintains that UI with modern features. Our UI is for customers without a modern solution like mental core.

This may be why you are experiencing some trouble. I would simply use the mental core UI.

1) I would not use “enjoy mentalray stringoptions” at the same time as the test UI found here. They may both alter the same string options without checking what is set by the other.

2) Leave it in the folder and use as is for now, don’t load it directly into Maya.

3) 0.2 adds some functionality and checks. I would use the most recent.

Sorry for the confusion, but I don’t actually have mental core installed. I was just curious whether you could use it together with the new UI here. I also disabled enjoy mentalray stringoptions and deleted the script from my folder so that I only use the mr-rendersettings .2 UI.

The problem is I don’t know what to do with the miUpdateStringOptions.py script that is part of the mr-rendersettings .2 package. I don’t know what it does, nor how I can call it in Maya. But so far my unified settings are working anyway when I use it. I still prefer the default adaptive settings, though, since I can get about the same render quality as with unified settings but twice as fast.

I never used the progressive options from the new UI, though. I also don’t use contrast as color or samples per object, and I leave all my error cutoffs at 0 under additional sampling.

Just so it’s in a couple places:

Unified Sampling speed decreases in many cases where your local samples are increased. Unified Sampling is designed to intelligently pick away at the scene, occasionally finding areas where pounding a pixel will remove noise. But to let Unified make a better decision about when to stop, you need lower local samples, not higher.

I would also observe that this scene isn’t very complex right now. Unified render times are less beneficial for this. Scenes you may have avoided doing before because of complexity and render time should now be easier with Unified Sampling. So take a leap of faith and, after learning some more basics of how it works, try a few complex scenes you’ve shied away from.

I recently rendered a scene (that may be released soon, I will share if I remember later) with upwards of 40 area lights and animation in about 40-50 minutes a frame at 1080HD resolution.

For the love of everything holy, I can NOT get this to enable even though I’ve tried a dozen times.

You need to be running at least mental ray 3.9. Preferably 3.10.

i.e. Maya 2012+

When I load the script I get an error:

“# Error: file: /Users/Shared/Autodesk/maya/scripts/mentalrayUI.mel line 1979: ImportError: file line 1: No module named miUpdateStringOptions #”

I have the miUpdateStringOptions.py saved in the same location as the other mel scripts. Is there something else I should be doing that I am not?

I didn’t realize that I needed to rewrite the original scripts in the Autodesk dir. Looks like it is working now.

No, actually you should not rewrite the Autodesk scripts. (Hope you have backup just in case.)

If on Windows, place them here: C:\Users\[UserName]\Documents\maya\2013-x64\prefs\scripts

Where [UserName] is your account. This will automatically override the settings when opened without you changing system files.

Hey great stuff fellas!

I have started doing some tests with basic scenes. I have followed your settings for lights and shaders, but my render times seem to double. It’s working, but I’m not getting render time improvements. Maya 2012. Is there something I’m missing?

Is the scene sufficiently complex? Not something like sphere on a plane? Or maybe a low detail scene?

Well, I used it for a room interior and for a scene with a plane and 2 spheres, and both times it took around double the time of the standard workflow, with samples 1/100 and quality 1. I’m guessing you should see an improvement in times?

Any trivial scene isn’t going to benefit immediately from Unified Sampling. Anything difficult to render or long to render should see gains when using Unified Sampling AND brute force techniques. So your spheres are not going to be faster most likely. And arch viz renders typically rely on a lot of clean spaces where sampling is at a minimum.

If you want to see Unified Sampling really shine, do an animation and move the camera. Leave motion blur on too.

As for most scenes, the current trend in rendering is easy setup at the cost of render time. But where Unified does a better job is that complex scenes begin to render more quickly with easier setup instead of slowing down. If you look at the Unified Sampling Redux article, you’ll see that despite the change in settings on the spheres, the overall quality is very similar. This means no more tweaking of smaller parameters or individual shaders. Simply tweaking Quality should be enough. mental ray will spend time where it needs to.

Regular old adaptive sampling will still be better at resolving certain details than unified sampling. For instance, straight edges may render faster because the rigid sampling grid is more likely to line up with the geometry’s structure. Unified sampling will be more efficient at rendering complex effects that naturally require more samples. 3D motion blur, 3D depth of field, and wide gloss reflections/refractions will all perform better with unified sampling.

Hey guys, love this site. I reference it all the time and I really appreciate seeing some light through all the fog that is MR. I’m still pretty much a noob when it comes to photo-real and higher end rendering, but I have the desire to learn. I’m using Maya 2013 right now and used select miDefaultOptions; to enter the boolean values for unified sampling. How do I know it is on and working? This may seem like a stupid question, but I feel like I might be missing something important. I see no options for quality or to change from adaptive to unified under the render settings. Do I need to install any special libraries? What am I doing wrong? Thanks so much for your time.

-JJ

If you’re using a more recent Maya 2013, try the Community UI found here: https://elementalray.wordpress.com/2012/08/10/new-maya-rendering-ui-testing/

There’s also a version for 2014

Thank you so much for the reply. I will check this out straight away! 😀

I think I asked the exact same question back when I started to use unified sampling… and after cursing in some languages I found the answer: go to the sampling options in the Quality tab and put something like -2 -2 in there… Obviously the render should come out overly blocky, BUT if you have unified sampling ON you should see it nice and clean. So that’s how you know it’s working: just undersample it like hell and you will see that the actual sampling comes from unified sampling and NOT from the classic sampling method. Hope it helps!

Thx. I will test that for sure. It definitely does help! 😀

Hi, I’m using the user IBL environment with unified sampling and I love it. But when I apply Fur grass, it doesn’t seem to catch light: everything else is fine, but the grass is dark. Is this a bug, or is there a trick? 🙂

thanks

What shader are you currently using for the hair? Is this Paint FX hair? Fur? mia_material?

The issue is almost certainly with the shader. The light loop must sample all lights for hair primitives, which your standard material shader does not do.

i.e.

for (mi::shader::LightIterator iter(state, state->normal, -1); !iter.at_end(); ++iter) {
    // etc...
}

Hi, I’m using Fur grass…

thanks

How can i fix this?

The shader being applied to the fur grass doesn’t support that lighting mode yet. (The environment light is not integrated)

Which version of Maya are you using?

You may be able to replace the shader being used with something like the mia_material using this code:

addAttr -ln "miExportMaterial" -at bool -defaultValue 1;
addAttr -ln "miMaterial" -at message;

Attach the shading group node of the replacement shader to this connection.

Hi, excuse my ignorance, but I don’t quite understand how to attach the shading group node of the replacement shader to that connection… What I did was create a mia_material_x, select its Shading Engine, and copy/paste your code into the MEL command line. But the only thing that happened was that “Mi Export Material” appeared, checked, in the Extra Attributes of the Shading Engine!

My version of Maya is 2013, but in the next week arrives 2014 🙂

Thanks once again

You should run that script for the hair shape, then attach the SG group message of the mia_material_x to the fur where that attribute exists.

Perfect…thank you
